Investing in SEO Crawl Budget to Increase the Value of SEO Actions

Discussions about crawl budget often either spark debates or sound too technical. But making sure your crawl budget fits your website’s needs is one of the most important boosts you can give your SEO.

Why invest in a healthier crawl budget?

SEO functions on one basic principle: if you can provide a web page that best fulfills Google’s criteria for answers for a given query, your page will appear before others in the results and be visited more often by searchers. More visits mean more brand awareness and more marketing leads for sales and pre-sales to process.

This principle assumes that Google is able to find and examine your page in order to evaluate it as a potential match for search queries. This happens when Google crawls and indexes your page. A perfectly optimized page that is never crawled by Google will never be presented in the search results.

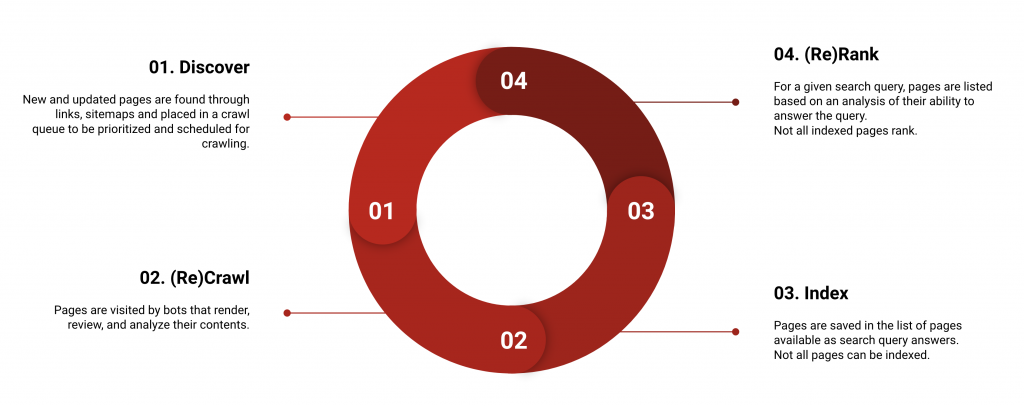

The search engine process for finding pages and displaying them in search results.

In short: Google’s page crawls are a requirement for SEO to work.

A healthy crawl budget ensures that the important pages on your site are crawled in a timely fashion. An investment in crawl budget, therefore, is an essential investment in an SEO strategy.

What is crawl budget?

“Crawl budget” refers to the number of pages on a website that a search engine discovers or explores within a given time period.

Crawl budget is SEO’s best attempt to measure abstract and complex concepts:

- How much attention does a search engine give your website?

- What is your website’s ability to get pages indexed?

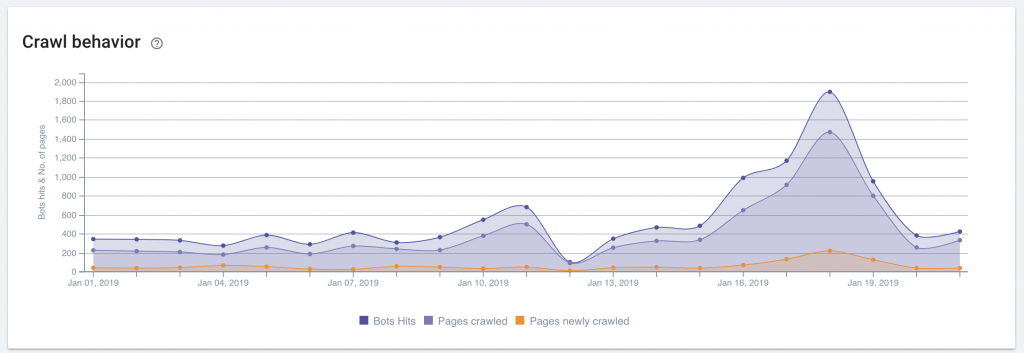

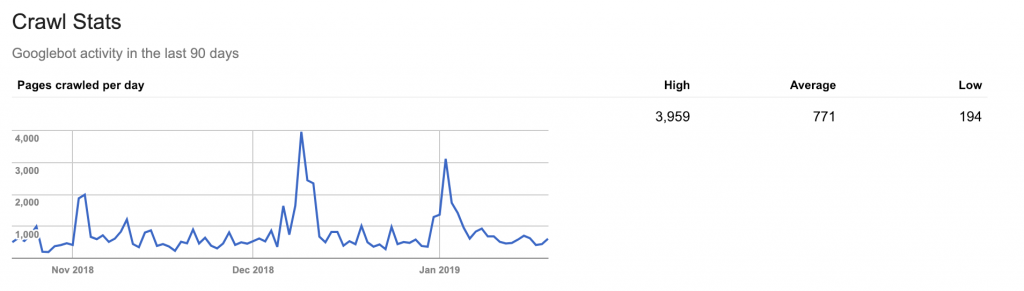

Graphical representation of daily googlebot hits on a website.

How much budget do I have?

The term “budget” is controversial, as it suggests that search engines like Google set a number for each site, and that you as an SEO ought to be able to petition for more budget for your site. This isn’t the case.

From Google’s point of view, crawling is expensive, and the number of pages that can be crawled in a day is limited. Google attempts to crawl as many pages as possible on the web, taking into account popularity, update frequency, information about new pages, and the web server’s ability to handle crawl traffic, among other criteria.

Since we have little direct influence on the amount of budget we get, the game becomes one of how to direct Google’s bots to the right pages at the right time.

No, really. How much crawl budget do I have?

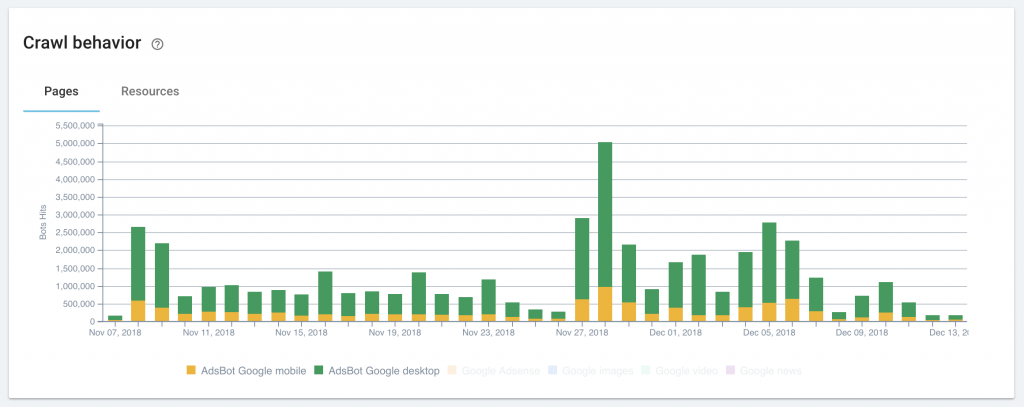

The best way to determine how many times Google crawls your website’s URLs per day is to monitor googlebot hits in your server logs. Most SEOs take into account all hits by Google bots related to SEO and exclude bots like AdsBot-Google (which verifies the quality and pertinence of a page used in a paid campaign).

Visits by Google’s AdsBot that should be removed from an SEO crawl budget.

Because spammers often spoof Google bots to get access to a site, make sure you validate the IPs of bots that present as googlebots. If you use the log analyzer available in OnCrawl, it does this step for you.
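If you validate bot IPs yourself, one common approach is a reverse DNS lookup followed by a forward lookup to confirm the result. The sketch below assumes that genuine crawler hostnames end in `googlebot.com` or `google.com`; the function name and the injectable lookup parameters are illustrative choices, and `socket.gethostbyname_ex` only covers IPv4 addresses.

```python
import socket

# Assumption: genuine Google crawler hostnames end in one of these domains.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Return True if `ip` passes a reverse-then-forward DNS check."""
    try:
        # Reverse lookup: IP address -> hostname.
        hostname = reverse_lookup(ip)[0]
    except OSError:
        return False
    if not hostname.endswith(GOOGLE_CRAWLER_DOMAINS):
        return False
    try:
        # Forward lookup: hostname -> list of IP addresses.
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False
    # The hostname must resolve back to the IP that made the request.
    return ip in addresses
```

Hits from IPs that fail this check can be dropped from the log data before you count googlebot visits.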

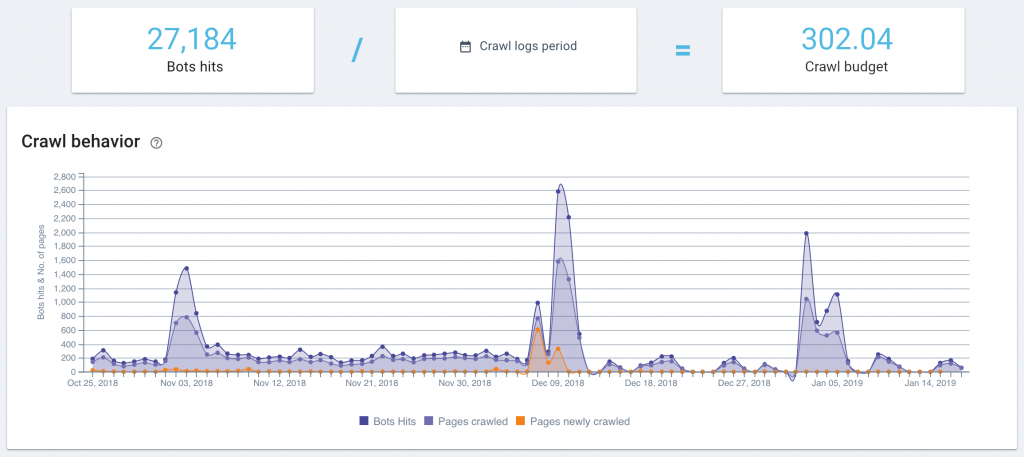

Take the sum of the hits over a period of time and divide it by the number of days in that period. The result is your daily crawl budget.
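The calculation above can be sketched in a few lines. This example assumes your log lines have already been parsed into `(day, user_agent)` pairs; the record format, the function names, and the sample data are hypothetical, and the user-agent matching is deliberately simple.

```python
from datetime import date

# Bots that are not related to SEO and should not count toward crawl budget.
EXCLUDED_AGENTS = ("adsbot-google",)


def is_seo_googlebot(user_agent):
    """Keep googlebot hits, but drop excluded bots such as AdsBot-Google."""
    ua = user_agent.lower()
    if any(excluded in ua for excluded in EXCLUDED_AGENTS):
        return False
    return "googlebot" in ua


def daily_crawl_budget(records, start, end):
    """Sum the SEO googlebot hits between start and end (inclusive),
    then divide by the number of days in that period."""
    days = (end - start).days + 1
    total = sum(
        1 for day, user_agent in records
        if start <= day <= end and is_seo_googlebot(user_agent)
    )
    return total / days


# Hypothetical sample: three SEO hits and one AdsBot hit over two days.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    (date(2019, 1, 1), GOOGLEBOT_UA),
    (date(2019, 1, 1), GOOGLEBOT_UA),
    (date(2019, 1, 2), "Googlebot-Image/1.0"),
    (date(2019, 1, 2), "AdsBot-Google (+http://www.google.com/adsbot.html)"),
]
print(daily_crawl_budget(sample, date(2019, 1, 1), date(2019, 1, 2)))  # 3 hits / 2 days = 1.5
```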

If you can’t obtain access to your server logs, you can currently still use the old Google Search Console to get an estimate. The Google Search Console data on crawl rates provides a single “daily average” figure that includes all Google bots. This is your crawl budget (it will be inflated by the inclusion of additional bots).

Managing crawl budget by prioritizing quality URLs

Since you can’t control the amount of budget you get, making sure your budget is spent on valuable URLs is very important. And if you’re going to spend your crawl budget on optimal URLs, the first step is to know which URLs are worth the most on your site.

As obvious as it sounds, you will want to use your budget on the pages that can earn the most visits, conversions and revenue. Don’t forget that this list of pages may evolve over time or with seasonality. Adapt these pages to make them more accessible and attractive to bots.

Bots are most likely to visit pages with a number of qualities:

- General site health: pages belong to a website that is functional, reliable, reasonably fast, and able to support crawl requests without going down; the site is not spam and has not been hacked

- Crawlability: pages receive internal links, respond when requested, and aren’t forbidden to bots

- Site architecture: pages are linked to from topic-level pages and thematic content pages link to one another, using pertinent anchor text

- Web authority: pages are referenced (linked to) from high-quality outside sources

- Freshness: pages are added or updated when necessary, and pages are linked to from new pages or pages with fresh content

- Sitemaps: pages are found in XML sitemaps submitted to the search engine with an appropriate <lastmod> date

- Quality content: content is readable and responds to search query intent

- Ranking performance: pages rank well but are not in the first position

If this list looks a lot like your general SEO strategy, there’s a reason for that: quality URLs for bots and quality URLs for users have nearly identical requirements, with an extra focus on crawlability for bots.

Stretching your crawl budget to cover essentials

You can stretch your crawl budget to cover more pages, just like you can stretch a financial budget.

Cut unnecessary spending

The first level of unnecessary spending is any googlebot hit on a page you don’t want to show up in search results. Duplicate content, pages that should be redirected, and pages that have been removed all fall into this category. You may also want to include, for example, confirmation pages shown when a form is successfully sent, or pages in your sign-up funnel, as well as test pages, archived pages and low-quality pages.

If you have prioritized your pages, you can also include pages with no or very low priority in this group.

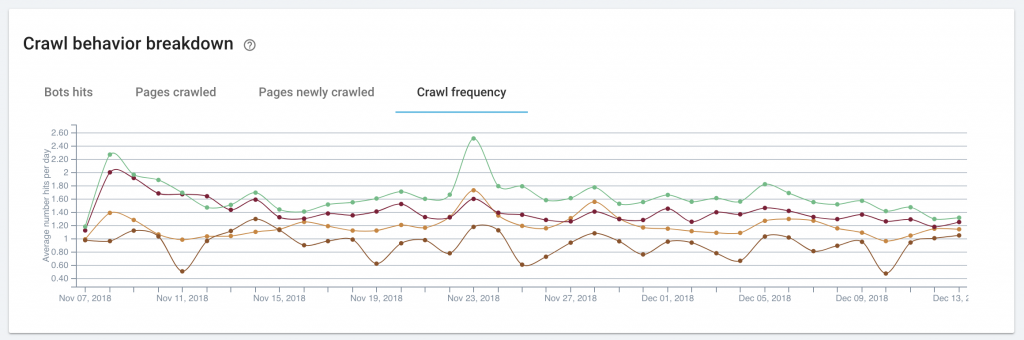

Viewing the number of googlebot hits per day for different page categories.

To avoid spending crawl budget on these pages, keep bots away from them. You can use redirections as well as directives aimed at bots to herd bots in a different direction.
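One directive aimed at bots is a `Disallow` rule in robots.txt. As a minimal sketch, the standard library's `urllib.robotparser` can check which paths a set of rules would close to crawlers before you deploy them. The rules and paths below are hypothetical examples, not recommendations for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for pages that should not use crawl budget.
ROBOTS_TXT = """\
User-agent: *
Disallow: /signup/
Disallow: /form/confirmation
Disallow: /test/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawlable(path, agent="Googlebot"):
    """Return True if the rules above allow `agent` to fetch `path`."""
    return parser.can_fetch(agent, path)


for path in ("/products/shoes", "/signup/step-2", "/test/new-layout"):
    print(path, crawlable(path))
```

Running a check like this against your list of priority URLs helps confirm that a new rule does not block a page you want crawled.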

Limit budget drains

Sometimes unexpected configurations can become a drain on crawl budget.

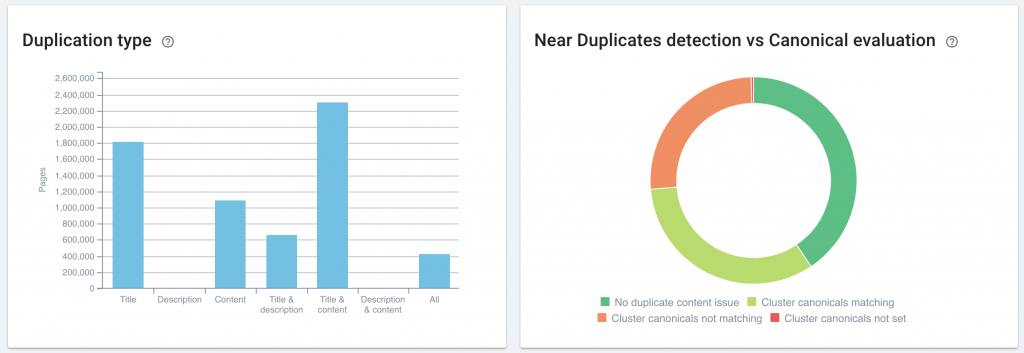

Google will spend twice as much budget when two similar pages point to different canonical URLs. In particular, if your site uses facets or query string parameters, reviewing your canonical strategy can help you save on crawl budget. Tools like the canonical evaluations in OnCrawl can help make this task easier.

Tracking hits by googlebots on pages with similar content that do not declare a single canonical URL.

Using 302 redirects, which tell search engines that the content has been temporarily moved to a new URL, can also spend more budget than expected. Google will often return to re-crawl pages with a 302 status in order to find out whether the redirect is still in place, or whether the temporary period is over.

Reduce investments with few returns

User traffic data, either from analytics sources such as Google Analytics or from server log data, can help pinpoint areas where you’ve been investing crawl budget with little return for your efforts in user traffic.

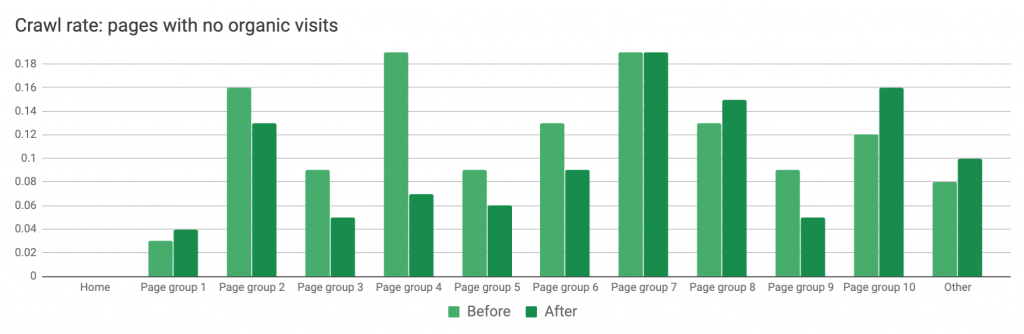

Examples of pages you might be over-investing in include URLs that rank in the first few pages of the SERPs but have never had organic traffic, newly crawled pages that take much longer than average to receive their first SEO visit, and frequently crawled pages that do not rank.
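To spot frequently crawled pages that bring no organic traffic, you can cross-reference googlebot hits with visit counts per URL. The sketch below assumes both datasets are already aggregated into dictionaries keyed by URL; the function name, the `min_hits` threshold, and the sample figures are hypothetical.

```python
def low_return_urls(crawl_hits, organic_visits, min_hits=10):
    """List URLs crawled at least `min_hits` times that received no
    organic visits, most-crawled first."""
    candidates = [
        (url, hits)
        for url, hits in crawl_hits.items()
        if hits >= min_hits and organic_visits.get(url, 0) == 0
    ]
    return sorted(candidates, key=lambda item: item[1], reverse=True)


# Hypothetical aggregated data for one month.
crawl_hits = {"/a": 40, "/b": 25, "/c": 3, "/d": 60}
organic_visits = {"/a": 0, "/b": 120, "/d": 0}

print(low_return_urls(crawl_hits, organic_visits))
# [('/d', 60), ('/a', 40)]
```

The threshold is a judgment call: set it relative to the average crawl frequency for the page group you are examining.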

Crawl rate for pages that receive no organic visits in strategic page groups: before and after implementing improvements.

Returns on crawl budget investments

When you improve how crawl budget is spent on your site, you can see valuable returns:

- Reduced crawling of pages you don’t want to rank

- Increased crawling of pages that are being crawled for the first time

- Reduced time between publishing and ranking a page

- Improved crawl frequency for certain groups of pages

- More effective impact of SEO optimizations

- Improved rankings

Some of these are direct effects of your crawl budget management, such as reduced crawling of pages you’ve told Google not to crawl. Others are indirect: for example, as your SEO work has more of an effect, your site’s authority and popularity increase, increasing your rankings.

In both cases, though, a healthy crawl budget is at the core of an effective SEO strategy.

OnCrawl

OnCrawl is a technical SEO platform that uses real data to help you make better SEO decisions. Interested in monitoring or improving your crawl budget, as well as other technical SEO elements, and in using a powerful platform with friendly support provided by experienced SEO experts? Ask them about their free trial at the Search LDN event on Monday, February 4th, where they are headline sponsors. If you cannot make it for whatever reason, visit OnCrawl at www.oncrawl.com.